Update Note, 2007-02-03

The results you get may be different from what I show here. Someone reported to me that they ran these on their system and didn't see the problem, but they were using a custom beta version of .NET. I have not tested this on Vista yet. I also removed all the debug file junk from these ZIP files that was causing them to be so large.

Update Note, 2007-04-01

Running this program on a machine with MS Visual Studio 2005 Pro installed does not show as much memory use as indicated below. It appears that something installed with VS 2005 tunes .NET for better performance. A plain install of .NET 2.0 on a fresh OS install, including Vista, should show the behavior described below.

I also got myself involved in a general .NET vs. Win32 performance discussion of this topic here:

http://discuss.joelonsoftware.com/default.asp?dotnet.12.463019.13

This blog post shows what this problem can do in a large application deployment:

http://weblogs.asp.net/pwilson/archive/2004/02/14/73033.aspx

Update Note, 2007-10-25

Added a new topic, "Performance .NOT", which looks at claims made on MSDN about .NET memory performance.

Also, here is an interesting article from the IEEE on this subject: C# and the .NET Framework: Ready for Real Time? (alternate pay-for link)

Is .NET suitable for real-time projects?

I chose Borland C++ Builder for my "soft" real-time machine vision applications because it provided the following advantages:

- Rapid Application Development (RAD) for the GUI.

- The power of C++ for vision processing.

- Extensive Visual Component Library (VCL) with various container objects that are much faster than the equivalent STL containers.

- Close integration between the C++ processing code and the GUI.

Had I decided to use Microsoft tools back in 1998 for my projects, I would have used Visual C++ for the processing code and Visual Basic for the GUI. Visual C++ was not a true RAD system. Yes, there are wizards for design of the initial GUI, but once you have a design just try to change it. Visual Basic was the only true Microsoft RAD development language. This combination would also have introduced problems with trying to interface the two languages and make them work together. I just didn't want to go in that direction.

Now that Visual Studio 2005 has been released, Microsoft has a fully RAD C++ development environment. I can now use C++ for the real-time image processing and the GUI can now be built in C++ using RAD methods. The only drawback is that the C++ RAD environment is managed code and will run slower than native code. It is possible to put the image processing into a Win32 service module and interface to it using the managed code GUI, but this will not be as easy as a fully Win32 RAD environment such as Borland C++ Builder.

As part of my evaluation process, I decided to build my standard Cache Test application in both C++ and C# using VS 2005. You can download the source and .NET 2.0 versions of these programs here:

dNET_Cache_Test.zip 36 KB ZIP file, the managed C++ version.

dNET_CS_Cache_Test.zip 20 KB ZIP file, the managed C# version.

You may need to install these into your VS 2005 project directory to get them to run. They will not run on .NET 1.1; they require .NET 2.0.

Results of .NET Cache Test

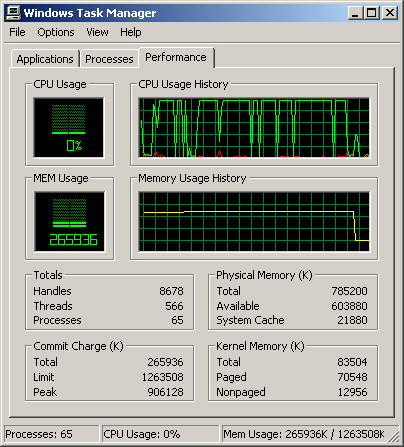

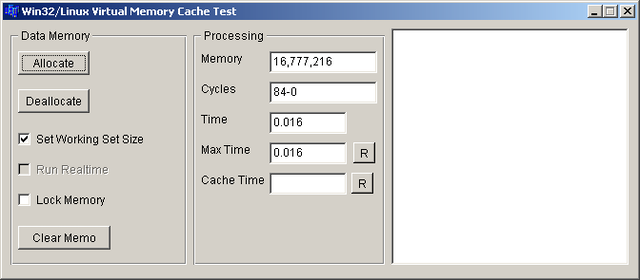

Just for reference, here is the Win32 version running on a Pentium 4 2.8 GHz CPU. The time indicated is what it takes to increment all the bytes in a 16 MB block of memory.

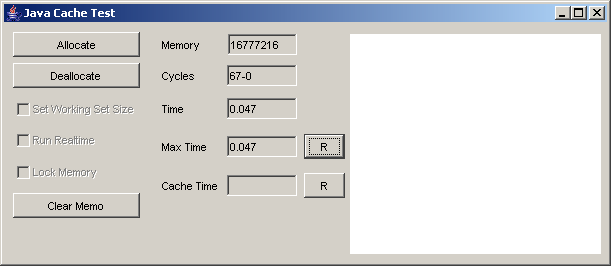
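The measurement described above, incrementing every byte in a 16 MB block and timing it, can be sketched in standard C++ roughly as follows. This is a minimal sketch and not the actual Cache Test source: the names `make_block` and `time_increment_pass` are hypothetical, and `std::chrono` stands in for whatever timer the original program uses.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: allocates a zero-filled block of `megabytes` MB.
std::vector<std::uint8_t> make_block(std::size_t megabytes) {
    return std::vector<std::uint8_t>(megabytes * 1024 * 1024, 0);
}

// Hypothetical helper: increments every byte of `block` once and returns
// the elapsed wall-clock time in milliseconds.
double time_increment_pass(std::vector<std::uint8_t>& block) {
    assert(!block.empty());
    const auto start = std::chrono::steady_clock::now();
    for (std::uint8_t& b : block) {
        ++b;  // unsigned byte, so 255 wraps around to 0
    }
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

Calling `make_block(16)` and passing the result to `time_increment_pass` gives one timing sample. Repeating the pass several times and keeping the smallest value would reduce noise from other processes.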

This example shows how much slower the test runs in a Java virtual machine. It was run in the debugger, which added further delay.

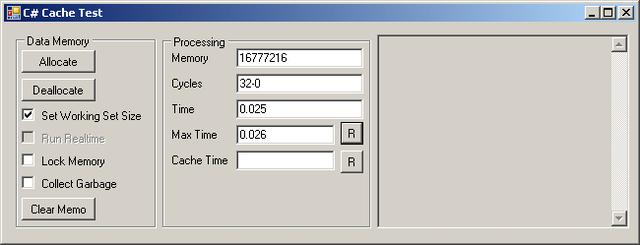

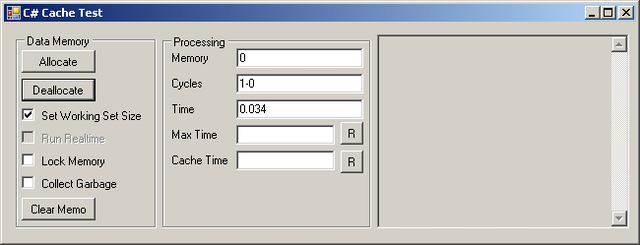

Here is the equivalent C# time running in release mode outside of the VS 2005 environment. The C++ time is not much different. As you can see, managed code is almost twice as slow as native Win32 x86 code.

All the following tests were run with the "(force) Collect Garbage" option off.

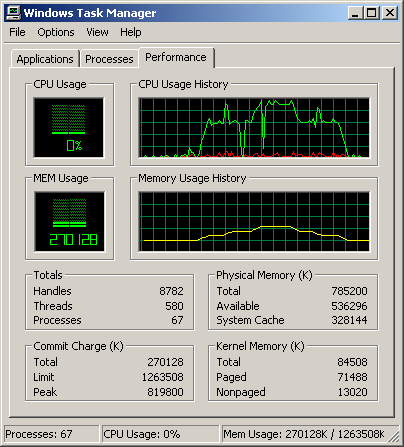

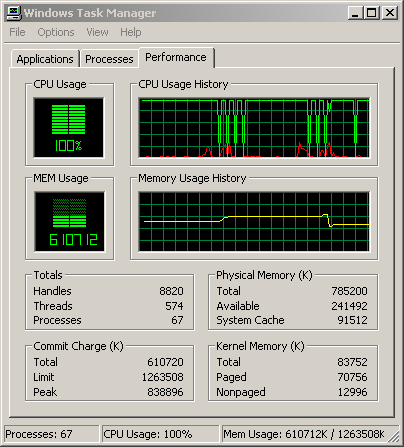

Now suppose we run the Win32 version of Cache Test and allocate a lot of memory and then release it. This is what we see in the Task Manager for memory use. The memory gets used and then gets freed until we are back where we started.

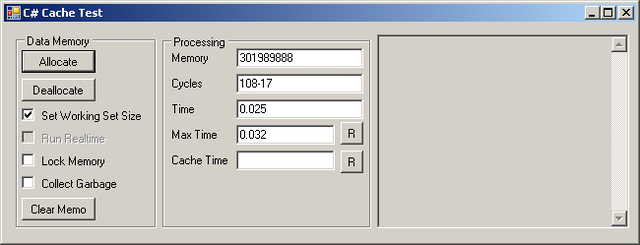

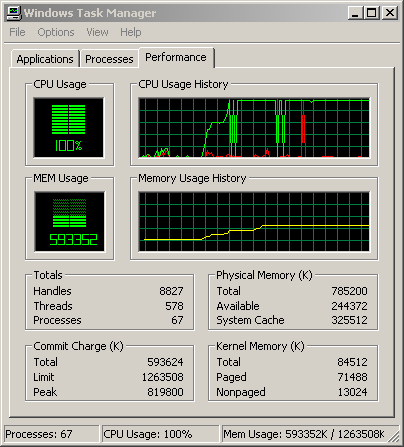

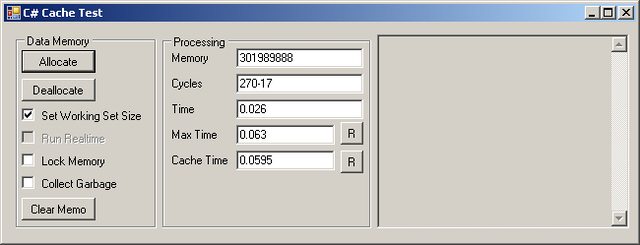
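The native allocate-and-release pattern can be sketched in C++ along these lines. This is only an illustration under stated assumptions: the `BlockPool` class and its 1 MB block size are hypothetical and are not taken from the Cache Test source.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical helper class, not taken from the Cache Test source.
// Holds memory as a list of 1 MB blocks so the total can be raised or lowered.
class BlockPool {
public:
    static constexpr std::size_t kBlockBytes = 1024 * 1024;

    // Grow or shrink the pool until it holds exactly `target_mb` blocks.
    void resize_mb(std::size_t target_mb) {
        while (blocks_.size() > target_mb) {
            blocks_.pop_back();  // unique_ptr deletes the block right here
        }
        while (blocks_.size() < target_mb) {
            std::unique_ptr<unsigned char[]> block(new unsigned char[kBlockBytes]);
            // Write to every byte so the pages are actually committed.
            std::memset(block.get(), 1, kBlockBytes);
            blocks_.push_back(std::move(block));
        }
        assert(blocks_.size() == target_mb);
    }

    // Number of 1 MB blocks currently held.
    std::size_t allocated_mb() const { return blocks_.size(); }

private:
    std::vector<std::unique_ptr<unsigned char[]>> blocks_;
};
```

A sequence such as `pool.resize_mb(300); pool.resize_mb(200); pool.resize_mb(300); pool.resize_mb(0);` exercises the allocate and release steps. Each block is deleted deterministically when it is popped, but how quickly that memory returns to the operating system depends on the C++ runtime's allocator, so the Task Manager trace is still the thing to watch.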

Now try this with the .NET version. We allocate 300 MB of memory.

So far, so good. We have about 300 MB of programs in memory and have used up an additional 300 MB for the Cache Test program.

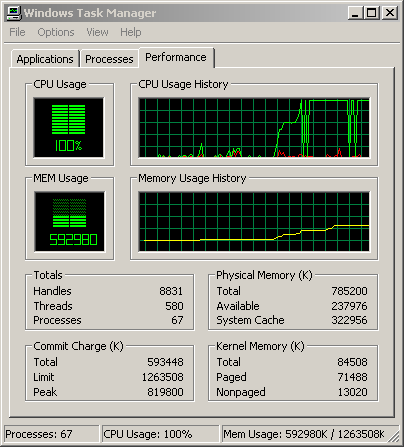

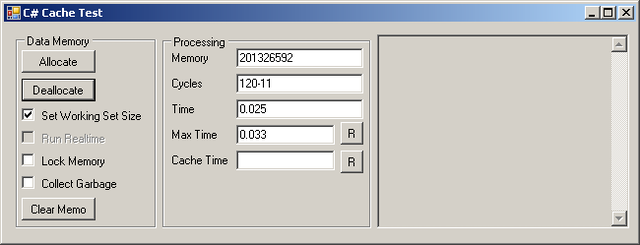

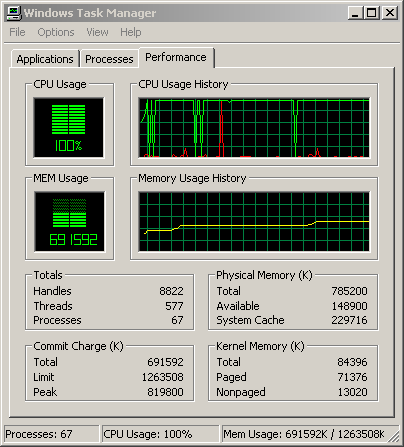

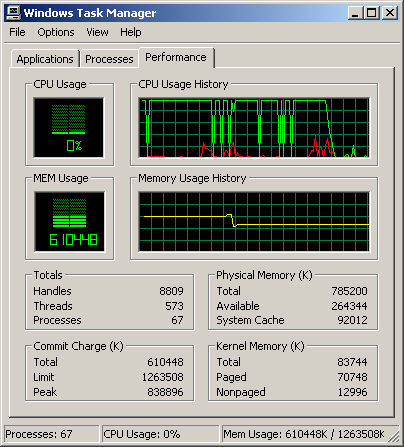

Now we drop the allocation back to 200 MB.

Now this is odd: we still have the full 300 MB allocated, and after waiting a while for garbage collection we see no decrease.

Well, perhaps if we go back up to 300 MB, it will use the memory we released.

Well, I guess that assumption wasn't very good. We have now used up an additional 100 MB.

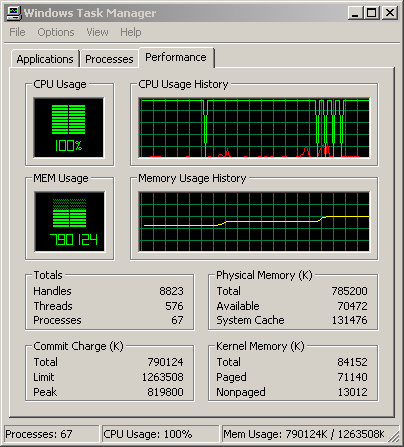

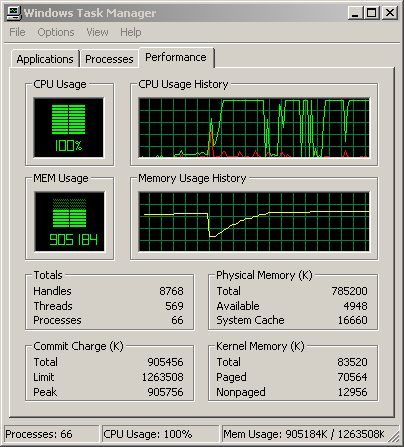

After several more cycles of releasing and allocating 100 MB, we start to run out of system memory. At this point, Windows starts paging other programs out of physical memory to make room for our application.

If we keep doing this, eventually the garbage collector will release some memory and start over, but not until we have run well over the limits of physical memory.

You might think the garbage collector would work if we go back to 0 MB allocated. Perhaps it just saw the CPU was too busy and decided not to run.

Well, that is not the case. The process still holds an excess of 300 MB even though the program's own allocation is back to zero.

We now allocate some more memory. This shows the limit right before the garbage collector will run and release some memory. At this point the system is very low on memory and programs start to run slowly because of heavy virtual memory use.

Only when we exit the Cache Test application do we get all the memory back.

I will let you draw your own conclusions on how this will affect any real-time processing you are running. I don't think I will be switching to .NET any time soon for either real-time or GUI use.